Advertisement

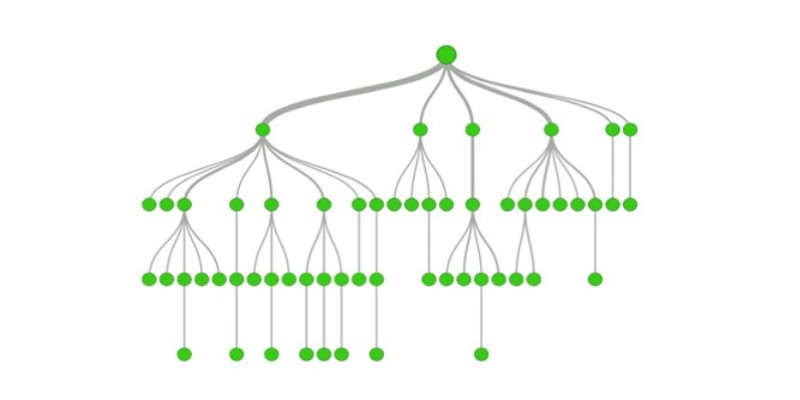

Decision trees might sound technical, but the core idea is surprisingly straightforward. They work like a series of yes-or-no questions, breaking data into smaller pieces until there’s a decision. That’s it. But what makes one decision tree better than another? It comes down to two things: how it decides to split and how we control its behavior using hyperparameters. Let's break both of those down without overcomplicating it.

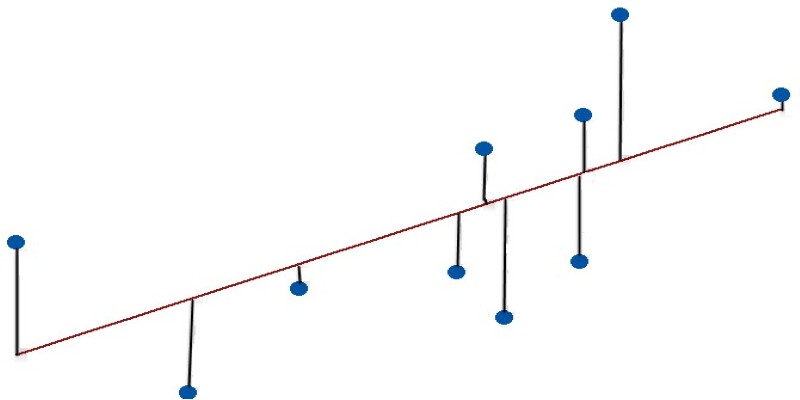

At the heart of every decision tree is a split — a simple rule like “Is age > 50?” The model keeps creating these rules at each step, working through the data, trying to build a path that leads to the right answer. But how does it choose the rule in the first place?

There’s no guesswork. It uses something called a split method — a way to measure how good a potential rule is. And there are a few different approaches.

This is a very common way, particularly with classification problems. Gini impurity considers how frequently you would be incorrect if you just blindly guessed the label in a collection. A clean collection — with all items from the same class — has Gini impurity 0. The more assorted the collection is, the more impure it is.

So when the tree looks at a possible split, it asks: does this split make the group purer? If the answer is yes, it's a contender.

Another option is entropy. The concept comes from information theory, and it measures disorder. Like Gini, entropy is 0 when the group is pure. But the calculation is slightly different. The tree uses a metric called "information gain" — how much uncertainty is reduced by making a split.

Some argue entropy is more precise, but Gini is faster to compute. In practice, they often produce very similar trees.

When you're not classifying but predicting numbers — say, house prices or temperature — the impurity measures change. In regression tasks, decision trees use methods like Mean Squared Error (MSE). It calculates how much the predictions deviate from actual values. The lower the error after a split, the better.

Once the tree knows how to split, the next big challenge is knowing when to stop. Left to itself, a decision tree will keep going until every data point is in its own group. Sounds great, but it’s not. That level of detail often leads to a model that only works well on the training data — and poorly on anything new. This is called overfitting.

So, we use hyperparameters to set boundaries.

This one's simple. How deep can the tree go? If it's allowed to grow too deep, it becomes too specific. If it's too shallow, it misses patterns. There's a balance. Tuning max_depth is a straightforward way to control the tree's complexity.

This decides the minimum number of samples needed to attempt a split. If it’s too small, the tree splits too often, chasing noise. If it’s higher, it’s more selective, forcing the model to find more meaningful divisions.

Slightly different from the one above — this controls the minimum number of samples allowed at a leaf (a final decision point). Why does this matter? It stops the model from creating tiny, overly specific rules that won’t generalize well.

This tells the model how many features it’s allowed to consider at each split. With all features, the tree might pick the most obvious ones every time, missing interesting patterns. Limiting this can introduce useful randomness, especially in ensemble methods like Random Forests.

Tuning hyperparameters isn’t about guessing. It’s a process of selecting combinations, testing how they perform, and adjusting. Start by being clear on your goal. Are you trying to maximize accuracy? Minimize mean squared error? Improve precision or recall? The metric you choose should match what matters for the task. Next, define a sensible range of values for each hyperparameter. You don’t need to test every number — just enough to see what works. For example:

These are starting points. The exact values depend on your data. Now it’s time to test. With cross-validation, you split the dataset into several parts, train on some, and test on the rest. This helps you see how the model behaves on different samples instead of relying on one outcome. Then comes the search.

Grid search tests every possible combination. It’s slow but thorough. Random search picks from the ranges at random. It’s faster and often effective, especially when only a few parameters matter. After testing, take the best-performing parameters and train a final model. Test it on new data. If performance holds, great — your tuning worked. If not, adjust and try again. Some models need more rounds before they perform reliably.

Decision trees aren’t always the best option — but when they’re good, they’re very good. They’re easy to understand, fast to train, and don’t need much data preparation. They can handle both numbers and categories, missing values, and mixed data types.

And if a single tree isn’t strong enough, it becomes the base for more advanced methods like Random Forests or Gradient Boosted Trees.

But even with these upgrades, everything still begins with one simple thing: the split. And knowing how those splits work — and how to keep them in check — is what makes a decision tree useful instead of just overfit noise.

Decision trees rely on two main things: the way they split the data and the rules that control how far they’re allowed to go. If you get both of these right, you end up with a model that’s clear, smart, and accurate. Not every problem needs a decision tree, but for the ones that do, these methods make all the difference.

Advertisement

Learn how Claude AI offers safe, reliable, and human-aligned AI support across writing, research, education, and conversation.

Explore 6 AI-powered note-taking apps that boost productivity, organize ideas, and capture meetings effortlessly.

Explore 6 ways ChatGPT enhances video game scriptwriting through dialogue, quest, and character development support.

DataRobot's Enterprise Suite helps businesses manage generative AI with governance, monitoring, and compliance for safe AI use

Can ChatGPT really solve math problems? Discover its accuracy in arithmetic, algebra, geometry, and calculus.

Explore 8 of the best AI-powered apps that enhance productivity and creativity on Android and iPhone devices.

Explore 8 strategic ways to use ChatGPT for content, emails, automation, and more to streamline your business operations.

How Microsoft Copilot lets you access GPT-4 Turbo for free. Learn step-by-step how to use Copilot for writing, research, coding, and more with this powerful AI tool

Explore the top 4 ChatGPT plugin store improvements users expect, from trust signals to better search and workflows.

Wondering whether TensorFlow or Keras fits your project better? Learn how they compare in simplicity, flexibility, performance, and real-world use cases

Discover how Emotion AI systems detect facial expressions, voice tone, and gestures to interpret emotional states.

Ever wondered what plugins come standard with ChatGPT and what they're actually useful for? Here's a clear look at the default tools built into ChatGPT and how you can make the most of them in everyday tasks